The Optispeech project involves designing and testing a real-time tongue model that can be viewed inside a transparent head while a subject talks, for the purposes of treating speech errors and teaching foreign-language sounds. This work has been conducted in partnership with Vulintus and with support from the National Institutes of Health (NIH).

Video Demo #1

https://www.youtube.com/watch?v=9uHqIRs7ZjM

This video shows a talker with NDI WAVE sensors placed on the tongue hitting a virtual target sphere located at the alveolar ridge. When an alveolar consonant is produced (e.g., /s/, /n/, /d/), the sphere changes color from red to green.
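The red-to-green feedback can be illustrated with a simple point-in-sphere test. This is a minimal sketch under stated assumptions, not the Optispeech implementation: it assumes each tongue sensor reports an (x, y, z) position in millimetres in the same coordinate frame as the target, and the names `TargetSphere` and `target_color`, as well as the coordinates and radius in the usage lines, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class TargetSphere:
    """Hypothetical virtual target: center (x, y, z) and radius, in millimetres."""
    center: tuple[float, float, float]
    radius: float

    def is_hit(self, point: tuple[float, float, float]) -> bool:
        # A sensor counts as "hitting" the target when it lies inside or on the sphere.
        return math.dist(point, self.center) <= self.radius


def target_color(target: TargetSphere,
                 sensor_positions: list[tuple[float, float, float]]) -> str:
    """Return 'green' if any tongue sensor is inside the target, otherwise 'red'."""
    return "green" if any(target.is_hit(p) for p in sensor_positions) else "red"


# Usage with made-up numbers: a 3 mm target placed at an assumed alveolar-ridge position.
alveolar_target = TargetSphere(center=(0.0, 10.0, 5.0), radius=3.0)
print(target_color(alveolar_target, [(0.0, 12.0, 5.0)]))  # 2 mm from center -> green
print(target_color(alveolar_target, [(0.0, 20.0, 5.0)]))  # 10 mm from center -> red
```

In a live system this check would run once per incoming sensor frame, so the sphere's color tracks the tongue continuously; a real setup would also need to calibrate the target position to each talker's anatomy.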

Video Demo #2

https://www.youtube.com/watch?v=Oz42mKvlzqI

This video shows an American talker learning a novel sound not found in English. When the post-alveolar consonant is produced, the target sphere changes color from red to green. Here, the NDI WAVE system serves as input. A version for the Carstens AG501 electromagnetic articulography (EMA) system is also being built.

For More Information:

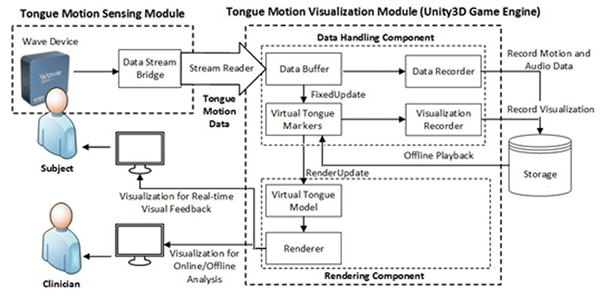

Katz, W., Campbell, T., Wang, J., Farrar, E., Eubanks, J., Balasubramanian, A., Prabhakaran, B., and Rennaker, R. (2014). Opti-Speech: A real-time, 3D visual feedback system for speech training. Interspeech, 1174-1178.

Watkins, C. H. (2015). Sensor driven realtime animation for feedback during physical therapy (Order No. 1593789). Available from Dissertations & Theses @ University of Texas at Dallas; ProQuest Dissertations & Theses Global. (1705881361).